Table of contents

- Introduction

- Bloom Filters: A Probabilistic Approach

- Mechanism Behind Bloom Filters

- Mathematical Analysis and Error Rates

- Practical Applications

- Master/Slave Architecture for Database Replication

- Addressing Single Points of Failure

- Types of Replication: Synchronous vs. Asynchronous

- Master/Slave Architecture Dynamics

- Mitigating Split-Brain Problem and Sharding

- Distributed Consensus Protocols

- Benefits of Master/Slave Architecture

- Optimizing Database Writes with LSM Trees

- Fast Writes with Linked List

- Introduction to LSM Trees

- Log Compaction for Efficient Reads

- Hybrid Approach and Bloom Filters

- Compaction Strategies

- Conclusion

- Database Migrations - A Comprehensive Guide

- Reasons for Database Migrations

- Planning a Database Migration

- Types of Database Migrations

- Database Migration Tools

- Steps in the Migration Process

- Challenges and Best Practices

- Post-Migration Activities

- Conclusions

Link to the PDF: https://jumpshare.com/v/C2YZHPLEw1V5QDUrLrCk

Efficient String Comparison with Bloom Filters

Introduction

In programming, speeding up searches without using excessive storage is a constant challenge. One widely used solution is the Bloom filter. This blog explores how Bloom filters work and where they are applied, particularly in browsers and social media platforms.

Bloom Filters: A Probabilistic Approach

Bloom filters use hashing to speed up string comparison. Each string is passed through several hash functions, and each hash value sets one bit in a shared bit array, giving a compact representation of every string searched so far. The video explains the probabilistic nature of Bloom filters: a negative answer is always correct, while a positive answer may be a false positive, with a low and tunable error rate.

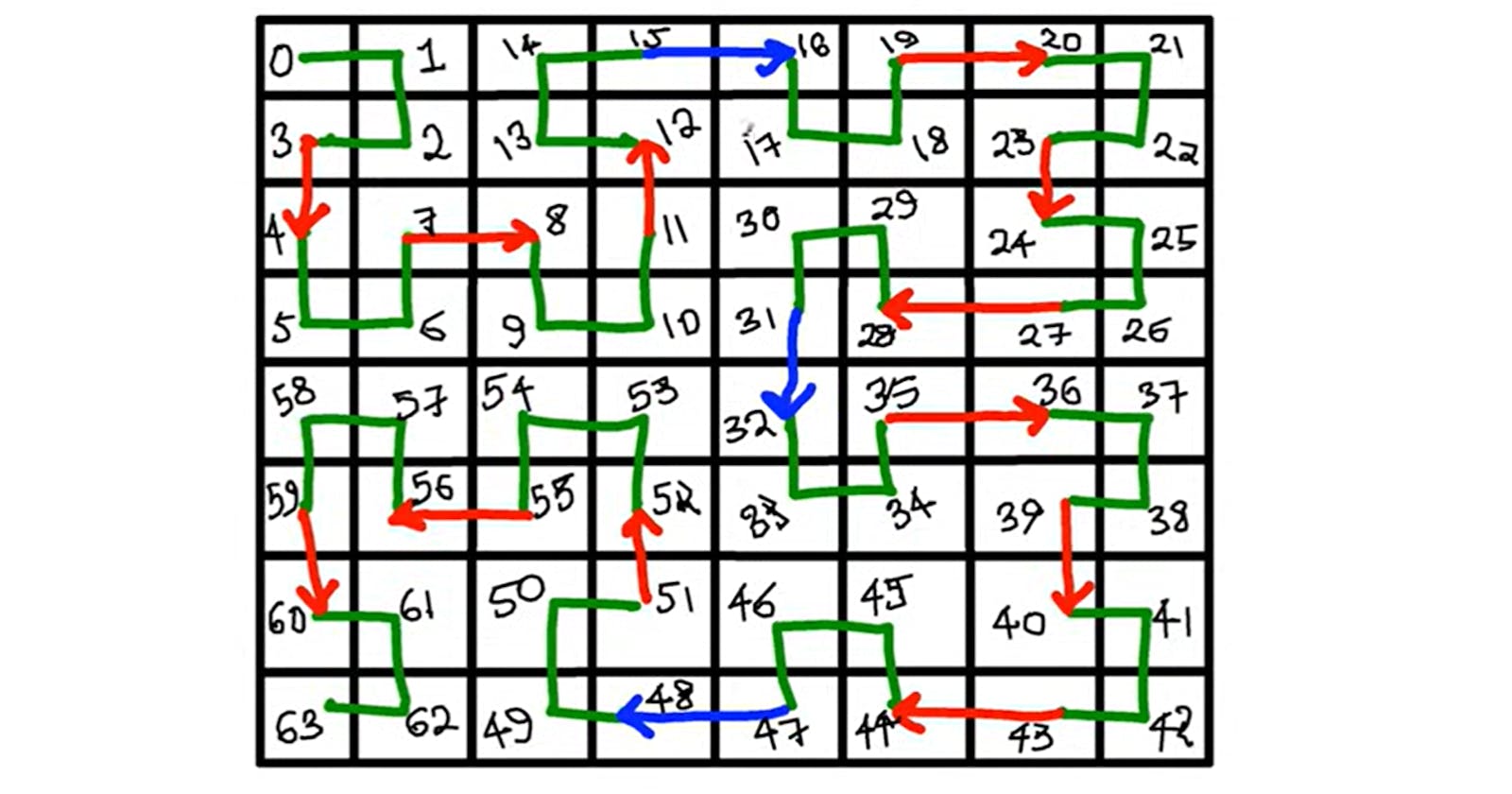

Mechanism Behind Bloom Filters

The heart of a Bloom filter is setting and checking bits based on hash values. When a string is looked up, the algorithm checks whether all the bits at its hash positions are set: if any of them is unset, the string has definitely not been searched before; if all are set, it probably has. The video also introduces the idea of using multiple layers of Bloom filters to improve accuracy.
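To make the set-and-check mechanism concrete, here is a minimal single-layer sketch in Python. The class and method names, the one-byte-per-bit array, and deriving the k hash functions by salting SHA-256 with an index are all illustrative assumptions, not the construction used in the video.

```python
import hashlib


class BloomFilter:
    """Minimal Bloom filter: each item sets k positions in an m-slot bit array."""

    def __init__(self, num_bits: int, num_hashes: int) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits)  # one byte per bit, for readability

    def _positions(self, item: str):
        # Derive k positions by salting SHA-256 with the hash index (an assumption).
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos] = 1

    def might_contain(self, item: str) -> bool:
        # False means "definitely never added"; True means "probably added".
        return all(self.bits[pos] for pos in self._positions(item))


seen = BloomFilter(num_bits=1024, num_hashes=3)
seen.add("https://example.com")
print(seen.might_contain("https://example.com"))  # True
```

`might_contain` never returns False for a string that was added; a True for a string that was never added is a false positive, which becomes more likely as the bit array fills up.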

Mathematical Analysis and Error Rates

The video dives into the mathematical analysis, detailing probability calculations and error rates. It sheds light on the optimal number of hash functions required for efficient Bloom filter performance.
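The standard approximation, for an m-bit filter holding n strings with k hash functions, is a false-positive rate of p ≈ (1 - e^(-kn/m))^k. It is smallest when k = (m/n) ln 2, and at that k, reaching a target rate p needs m = -n ln p / (ln 2)^2 bits. The small Python helpers below (hypothetical names) simply evaluate these textbook formulas; they are a sketch, not the video's derivation.

```python
import math


def false_positive_rate(m: int, n: int, k: int) -> float:
    """Approximate false-positive probability: (1 - e^(-k*n/m))^k."""
    return (1.0 - math.exp(-k * n / m)) ** k


def optimal_num_hashes(m: int, n: int) -> int:
    """k = (m/n) * ln 2, rounded to a whole number of hash functions (at least 1)."""
    return max(1, round(m / n * math.log(2)))


def bits_needed(n: int, p: float) -> int:
    """Bits for n items at target false-positive rate p: -n ln p / (ln 2)^2."""
    return math.ceil(-n * math.log(p) / math.log(2) ** 2)


m, n = 1000, 100
k = optimal_num_hashes(m, n)
print(k, false_positive_rate(m, n, k))
print(bits_needed(1000, 0.01))
```

With m = 1000 bits and n = 100 strings this gives k = 7 and a false-positive rate of roughly 0.8%; holding 1,000 strings at a 1% rate takes about 9,600 bits (about 1.2 KB), far less than storing the strings themselves.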

Practical Applications

The blog concludes with practical applications of Bloom filters in browsers and social media platforms. Their role in caching and enhancing search efficiency is highlighted, showcasing how Bloom filters contribute to a seamless user experience.
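A common caching pattern is to consult the filter before an expensive lookup, such as a database query or a network call, and skip that lookup whenever the filter answers "definitely not". The sketch below assumes a Python set standing in for the slow backing store; `CachedLookup`, the filter size, and the hash count are hypothetical choices.

```python
import hashlib


class BloomFilter:
    def __init__(self, num_bits: int, num_hashes: int) -> None:
        self.num_bits, self.num_hashes = num_bits, num_hashes
        self.bits = bytearray(num_bits)

    def _positions(self, item: str):
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos] = 1

    def might_contain(self, item: str) -> bool:
        return all(self.bits[pos] for pos in self._positions(item))


class CachedLookup:
    """Hypothetical front end: consult the filter before the slow backing store."""

    def __init__(self, backing_store: set[str]) -> None:
        self.store = backing_store  # stands in for a slow database or remote service
        self.filter = BloomFilter(num_bits=8192, num_hashes=4)
        for key in backing_store:
            self.filter.add(key)
        self.slow_lookups = 0  # how often we actually had to hit the store

    def contains(self, key: str) -> bool:
        if not self.filter.might_contain(key):
            return False  # definite "no": the slow store is never touched
        self.slow_lookups += 1  # "maybe": confirm against the real store
        return key in self.store
```

A false positive only costs one unnecessary slow lookup; it can never make `contains` return a wrong answer, because every "maybe" is confirmed against the store.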

Master/Slave Architecture for Database Replication

Addressing Single Points of Failure

In database management, relying on a single database creates a single point of failure: if it goes down, every service that depends on it goes down too. This blog explores the master/slave architecture using an example setup of four cell phones (the clients), a load balancer, and servers, and addresses the challenges of replicating data.

Types of Replication: Synchronous vs. Asynchronous

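The blog explains the two main types of replication. Synchronous replication makes the master wait until the slave confirms each update, which keeps data consistent at the cost of higher load and slower writes. Asynchronous replication lets the master confirm a write immediately and forward it later, which is less resource-intensive but risks inconsistencies: a slave may serve stale data, and updates not yet forwarded can be lost if the master fails.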
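The difference between the two modes comes down to when the master acknowledges a write. This toy Python model (hypothetical class names, in-memory dicts instead of databases, no network or failures) shows it:

```python
from collections import deque


class Replica:
    """A slave that simply applies whatever updates it receives."""

    def __init__(self) -> None:
        self.data: dict[str, str] = {}

    def apply(self, key: str, value: str) -> None:
        self.data[key] = value


class Master:
    """Toy master that replicates each write to its replicas."""

    def __init__(self, replicas: list[Replica], synchronous: bool) -> None:
        self.data: dict[str, str] = {}
        self.replicas = replicas
        self.synchronous = synchronous
        self.pending: deque = deque()  # async backlog of (key, value) updates

    def write(self, key: str, value: str) -> None:
        self.data[key] = value
        if self.synchronous:
            # Sync: the write counts as done only after every replica applied it.
            for replica in self.replicas:
                replica.apply(key, value)
        else:
            # Async: acknowledge immediately; replicas catch up later.
            self.pending.append((key, value))

    def flush(self) -> None:
        """Ship the async backlog (a real system would do this in the background)."""
        while self.pending:
            key, value = self.pending.popleft()
            for replica in self.replicas:
                replica.apply(key, value)
```

In asynchronous mode the replica lags until `flush` runs; if the master crashed before that, the pending writes would be lost, which is the inconsistency risk described above.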

Master/Slave Architecture Dynamics

Delving into the master/slave architecture, the blog describes how the master database sends update commands to the slave to keep its data synchronized. It also introduces peer-to-peer relationships and the master/master architecture, in which both nodes accept writes, so that the master itself is no longer a single point of failure.
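One way to picture the master sending update commands to a slave is a replication log: the master appends each write command in order, and the slave remembers how far it has replayed, so it can catch up after a disconnect. The `SET`/`DELETE` command format, the class names, and the offset bookkeeping are assumptions for illustration; master/master replication is not modeled.

```python
from typing import Optional


class ReplicationLog:
    """Hypothetical master-side log of update commands, in commit order."""

    def __init__(self) -> None:
        self.entries: list = []  # (op, key, value) tuples

    def append(self, op: str, key: str, value: Optional[str] = None) -> None:
        self.entries.append((op, key, value))


class Slave:
    def __init__(self) -> None:
        self.data: dict = {}
        self.offset = 0  # index of the next log entry this slave has not applied

    def catch_up(self, log: ReplicationLog) -> None:
        # Replay every command the slave has not seen yet, in the master's order.
        for op, key, value in log.entries[self.offset:]:
            if op == "SET":
                self.data[key] = value
            elif op == "DELETE":
                self.data.pop(key, None)
        self.offset = len(log.entries)
```

Replaying commands in the master's order is what keeps the slave's final state identical to the master's, even if it has been offline for a while.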

Mitigating Split-Brain Problem and Sharding

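The split-brain problem is introduced: if the link between two masters breaks during a network partition, each assumes the other has failed and keeps accepting writes on its own, so their data diverges. The solution is to add a third node, so that only the side of the partition that can reach a majority of the nodes keeps acting as master. Sharding, which divides the data and its responsibilities among nodes, is also discussed as a way to limit the impact of any single failure.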
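The reason a third node helps is the majority (quorum) rule: a side of a partition may keep acting as master only if it can reach more than half of the nodes, so at most one side ever qualifies. The sketch also shows the simplest way to divide keys among shards, by hashing. The function names are hypothetical, and CRC32 modulo the shard count is an assumed placement scheme; with plain modulo hashing, changing the number of shards moves most keys.

```python
import zlib


def has_quorum(reachable_nodes: int, cluster_size: int) -> bool:
    """True if this side of a partition can reach a strict majority of the cluster."""
    return reachable_nodes > cluster_size // 2


def shard_for(key: str, num_shards: int) -> int:
    """Stable key-to-shard mapping; CRC32 gives every node the same answer."""
    return zlib.crc32(key.encode("utf-8")) % num_shards


# Two nodes split 1-1: neither side has a majority, so neither may accept writes.
print(has_quorum(1, 2))                    # False
# Three nodes split 2-1: only the two-node side keeps acting as master.
print(has_quorum(2, 3), has_quorum(1, 3))  # True False
```

With only two nodes, a 1-1 split leaves neither side with a majority, so writes stop rather than diverge; the third node is what lets one side carry on.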

Distributed Consensus Protocols

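The blog briefly touches on Two-Phase Commit, MVCC, and Saga, emphasizing their role in keeping the nodes of a distributed system in agreement. Strictly speaking, only Two-Phase Commit is an agreement protocol (an atomic commit protocol); MVCC (multi-version concurrency control) is a concurrency-control technique, and Saga is a pattern that splits a long transaction into local steps, each undone by a compensating action if a later step fails.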
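Of the three, Two-Phase Commit is the one that directly makes nodes agree, and its core rule fits in a few lines. This is a single-process simulation with hypothetical names; it leaves out the network, timeouts, durable logging, and recovery when the coordinator crashes, all of which real implementations need.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    """A hypothetical database node taking part in one distributed transaction."""
    name: str
    can_commit: bool = True  # simulated vote for the prepare phase
    state: str = "idle"      # idle -> prepared -> committed, or -> aborted

    def prepare(self) -> bool:
        # Phase 1: get ready to commit (simulated) and vote yes or no.
        if self.can_commit:
            self.state = "prepared"
        return self.can_commit

    def commit(self) -> None:
        self.state = "committed"

    def abort(self) -> None:
        self.state = "aborted"


def two_phase_commit(participants: list[Participant]) -> bool:
    """Coordinator: commit everywhere only if every participant votes yes."""
    votes = [p.prepare() for p in participants]  # phase 1: ask everyone
    if all(votes):
        for p in participants:
            p.commit()  # phase 2: unanimous yes, so commit everywhere
        return True
    for p in participants:
        p.abort()  # phase 2: any "no" aborts the transaction everywhere
    return False
```

The key property is all-or-nothing: a single "no" vote means no participant commits.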

Benefits of Master/Slave Architecture

The blog outlines the benefits of master/slave architecture, including data backup, scalability, and load balancing through the addition of multiple slaves.
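The load-balancing benefit usually comes from routing: writes go to the master, while reads are spread across the slaves. A minimal sketch, assuming a hypothetical router that treats any query starting with SELECT as a read and everything else as a write:

```python
import itertools


class ReplicatedRouter:
    """Hypothetical router: writes go to the master, reads rotate across slaves."""

    def __init__(self, master: str, slaves: list[str]) -> None:
        self.master = master
        self.slaves = slaves
        self._next_slave = itertools.cycle(slaves)  # round-robin over slaves

    def route(self, query: str) -> str:
        is_read = query.lstrip().upper().startswith("SELECT")
        if is_read and self.slaves:
            return next(self._next_slave)
        return self.master  # writes (and reads when there are no slaves) go to the master


router = ReplicatedRouter("master", ["slave-1", "slave-2"])
print(router.route("INSERT INTO posts VALUES (1)"))  # master
print(router.route("SELECT * FROM posts"))           # slave-1
print(router.route("SELECT * FROM posts"))           # slave-2
```

Adding another name to the slave list spreads reads further. With asynchronous replication, a read served by a slave may briefly return stale data.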

Optimizing Database Writes with LSM Trees

Fast Writes with Linked List

This section explores optimizing database writes with a log-structured merge tree (LSM tree). It begins with a linked list for fast writes: appending a node takes constant time, O(1), no matter how long the list is.
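A sketch of why the linked list makes writes fast: with a tail pointer, each append is O(1), but finding a key means walking the whole list. The class names are hypothetical, and each node is assumed to hold a key-value pair.

```python
from typing import Optional


class Node:
    def __init__(self, key: str, value: str) -> None:
        self.key, self.value = key, value
        self.next: Optional["Node"] = None


class AppendOnlyLog:
    """Linked-list log: O(1) appends at the tail, O(n) lookups by scanning."""

    def __init__(self) -> None:
        self.head: Optional[Node] = None
        self.tail: Optional[Node] = None

    def append(self, key: str, value: str) -> None:
        node = Node(key, value)
        if self.tail is None:
            self.head = self.tail = node
        else:
            self.tail.next = node  # constant time: no traversal needed
            self.tail = node

    def get(self, key: str) -> Optional[str]:
        # Reads scan the whole log; the last matching entry is the newest value.
        result = None
        node = self.head
        while node is not None:
            if node.key == key:
                result = node.value
            node = node.next
        return result
```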

Introduction to LSM Trees

The blog introduces the log-structured merge tree (LSM) as a mechanism for efficient write operations. While logs provide swift writes, they also present challenges with slow reads due to sequential scanning.
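A minimal sketch of the core LSM idea, under simplifying assumptions (a hypothetical `TinyLSM` class, a tiny flush threshold, no deletes, no persistence): writes land in an in-memory buffer, and when the buffer is full it is sorted once and frozen as a sorted run. Reads check the buffer first, then binary-search the runs from newest to oldest.

```python
import bisect
from typing import Optional


class TinyLSM:
    """Hypothetical LSM sketch: an in-memory buffer flushed into sorted runs."""

    def __init__(self, buffer_limit: int = 4) -> None:
        self.buffer_limit = buffer_limit
        self.buffer: dict = {}  # recent writes, kept in memory
        # Each run is (sorted keys, matching values); runs are stored oldest first.
        self.runs: list = []

    def put(self, key: str, value: str) -> None:
        self.buffer[key] = value  # fast write: no sorting or disk work yet
        if len(self.buffer) >= self.buffer_limit:
            items = sorted(self.buffer.items())  # sort once, at flush time
            self.runs.append(([k for k, _ in items], [v for _, v in items]))
            self.buffer = {}

    def get(self, key: str) -> Optional[str]:
        if key in self.buffer:
            return self.buffer[key]
        for keys, values in reversed(self.runs):  # newest run wins
            i = bisect.bisect_left(keys, key)  # binary search within the run
            if i < len(keys) and keys[i] == key:
                return values[i]
        return None
```

Each read still has to check every run in the worst case, which is the cost that merging runs addresses.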

Log Compaction for Efficient Reads

To address the trade-off between fast writes and slow reads, the blog delves into log compaction within the LSM structure. Merging sorted chunks of data in the background enhances read performance, albeit with additional memory usage.
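Compaction is essentially the merge step of merge sort applied to two sorted runs, with one extra rule: when both runs contain the same key, only the newer value survives. A sketch, assuming each run is a list of (key, value) pairs sorted by key with no duplicate keys inside a run (the function name is hypothetical):

```python
def merge_runs(older: list, newer: list) -> list:
    """Merge two sorted runs of (key, value) pairs; on equal keys the newer value wins."""
    merged = []
    i = j = 0
    while i < len(older) and j < len(newer):
        if older[i][0] < newer[j][0]:
            merged.append(older[i])
            i += 1
        elif newer[j][0] < older[i][0]:
            merged.append(newer[j])
            j += 1
        else:
            merged.append(newer[j])  # same key: keep the newer write, drop the stale one
            i += 1
            j += 1
    merged.extend(older[i:])  # at most one of these two still has entries
    merged.extend(newer[j:])
    return merged
```

The merge is linear in the total size of the two runs, which is why it can run in the background; afterwards a read has one fewer run to search.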

Hybrid Approach and Bloom Filters

The blog advocates a hybrid approach that combines the fast writes of a linked list (the log) with the fast reads of a sorted array (the kind of lookup a B+ tree provides). It also introduces Bloom filters on the sorted arrays to speed up search queries.
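The sketch below shows the read side of that hybrid, assuming each sorted run carries its own Bloom filter: a lookup skips the binary search for any run whose filter says the key is definitely absent. `SortedRun`, `lookup`, 10 bits per key, and 3 SHA-256-derived hash functions are illustrative choices, not details from the source.

```python
import bisect
import hashlib
from typing import Optional


class SortedRun:
    """Hypothetical immutable sorted run with its own Bloom filter."""

    def __init__(self, items: dict, bits_per_key: int = 10, num_hashes: int = 3) -> None:
        pairs = sorted(items.items())
        self.keys = [k for k, _ in pairs]
        self.values = [v for _, v in pairs]
        self.num_bits = max(8, bits_per_key * len(pairs))  # larger runs get larger filters
        self.num_hashes = num_hashes
        self.bits = bytearray(self.num_bits)
        for key in self.keys:
            for pos in self._positions(key):
                self.bits[pos] = 1

    def _positions(self, key: str):
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def get(self, key: str) -> Optional[str]:
        if not all(self.bits[pos] for pos in self._positions(key)):
            return None  # definitely not in this run: skip the binary search
        i = bisect.bisect_left(self.keys, key)
        if i < len(self.keys) and self.keys[i] == key:
            return self.values[i]
        return None  # the filter gave a false positive


def lookup(runs: list, key: str) -> Optional[str]:
    """Check runs newest-first; the first hit is the latest value."""
    for run in reversed(runs):
        value = run.get(key)
        if value is not None:
            return value
    return None
```

Because the filter size is tied to the number of keys, larger runs automatically get larger filters.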

Compaction Strategies

The blog discusses strategies for merging sorted arrays and for building a larger Bloom filter for each merged array, since a merged array holds more entries. It emphasizes deciding carefully when to merge based on block sizes.
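One simple size-based policy, an assumption in the spirit of size-tiered compaction rather than the exact strategy from the source, is to merge the two newest runs whenever the newer one is at least as large as the older. The dict-based merge is a shortcut for brevity; a real store would stream a linear merge and build a new, larger Bloom filter for each merged run (not shown). Function names are hypothetical.

```python
def merge(older: list, newer: list) -> list:
    """Newest-wins merge of two runs of (key, value) pairs (simplified via a dict)."""
    combined = dict(older)
    combined.update(newer)  # duplicate keys: the newer run's value wins
    return sorted(combined.items())


def add_run_and_compact(runs: list, new_run: list) -> None:
    """Append a freshly flushed run, then merge while sizes say it is worthwhile."""
    runs.append(new_run)
    # Merge while the newest run is at least as large as the run before it.
    while len(runs) >= 2 and len(runs[-1]) >= len(runs[-2]):
        newer = runs.pop()
        older = runs.pop()
        runs.append(merge(older, newer))
```

With equal-sized flushes, run sizes behave like the digits of a binary counter, so the number of runs, and therefore the Bloom filters and binary searches per read, grows only logarithmically with the number of flushes.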

Conclusion

In summary, the LSM tree emerges as a powerful solution, balancing fast writes and reads through a clever combination of data structures and optimization strategies.

Database Migrations - A Comprehensive Guide

Reasons for Database Migrations

Database migrations are explored in-depth, covering reasons such as upgrading database versions, changing DBMS, data center relocation, and schema restructuring to adapt to evolving business requirements.

Planning a Database Migration

The blog details the crucial steps in planning a migration, including assessment, backup creation, ensuring compatibility, and understanding potential challenges.
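As an example of the backup step, Python's standard `sqlite3` module exposes SQLite's online backup API as `Connection.backup` (Python 3.7+). SQLite is an assumption here, since the post names no particular database, and the table name, file name, and `create_backup` helper are hypothetical.

```python
import sqlite3


def create_backup(source: sqlite3.Connection, backup_path: str) -> sqlite3.Connection:
    """Copy every page of `source` into `backup_path` and return the open copy."""
    backup = sqlite3.connect(backup_path)
    source.backup(backup)  # SQLite online backup via the standard sqlite3 module
    return backup


src = sqlite3.connect(":memory:")
src.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
src.execute("INSERT INTO users (name) VALUES ('ada')")
src.commit()
# In practice backup_path would be a file such as 'pre_migration.db'.
snapshot = create_backup(src, ":memory:")
print(snapshot.execute("SELECT COUNT(*) FROM users").fetchone()[0])  # 1
```

Restoring is the same call in the other direction, from the backup connection into the live database.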

Types of Database Migrations

Three main types of migrations – schema, data, and application code – are elucidated, each with its specific focus on modifying the database structure, transferring data, and adjusting application code.
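A small example of how the types differ, using SQLite from Python as an assumed setting with hypothetical table and column names: the schema migration adds a column, the data migration fills it from existing rows, and the application-code migration (not shown) would switch queries to read the new column.

```python
import sqlite3


def migrate_split_name(conn: sqlite3.Connection) -> None:
    """Hypothetical migration: add users.first_name and backfill it from full_name."""
    # Schema migration: change the table's structure.
    conn.execute("ALTER TABLE users ADD COLUMN first_name TEXT")
    # Data migration: populate the new column from data that already exists.
    conn.execute(
        "UPDATE users SET first_name = substr(full_name, 1, instr(full_name, ' ') - 1) "
        "WHERE instr(full_name, ' ') > 0"
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, full_name TEXT)")
conn.executemany(
    "INSERT INTO users (full_name) VALUES (?)", [("Ada Lovelace",), ("Alan Turing",)]
)
conn.commit()
migrate_split_name(conn)
print(conn.execute("SELECT first_name FROM users ORDER BY id").fetchall())
# [('Ada',), ('Alan',)]
```

Names without a space are left with a NULL `first_name` rather than a guessed value.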

Database Migration Tools

A variety of tools to streamline the migration process, such as AWS Database Migration Service and open-source tools like Flyway and Liquibase, are discussed, highlighting features such as schema comparison and data synchronization.
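Migration tools such as Flyway and Liquibase keep a record in the database of which versioned migrations have already been applied, so each one runs exactly once. The runner below is a hypothetical sketch of that idea on SQLite; it is not the API, file format, or history-table layout of either tool, and the `MIGRATIONS` list and `schema_version` table are made up for illustration.

```python
import sqlite3

# Hypothetical ordered migrations: (version, SQL). Real tools load these from files.
MIGRATIONS = [
    (1, "CREATE TABLE posts (id INTEGER PRIMARY KEY, body TEXT)"),
    (2, "ALTER TABLE posts ADD COLUMN created_at TEXT"),
    (3, "CREATE INDEX idx_posts_created_at ON posts (created_at)"),
]


def migrate(conn: sqlite3.Connection) -> list:
    """Apply pending migrations in version order; return the versions applied now."""
    conn.execute("CREATE TABLE IF NOT EXISTS schema_version (version INTEGER PRIMARY KEY)")
    current = conn.execute("SELECT COALESCE(MAX(version), 0) FROM schema_version").fetchone()[0]
    applied = []
    for version, sql in sorted(MIGRATIONS):
        if version <= current:
            continue  # already applied on an earlier run
        # Note: in this sketch the DDL and its version row are not one atomic unit.
        conn.execute(sql)
        conn.execute("INSERT INTO schema_version (version) VALUES (?)", (version,))
        conn.commit()
        applied.append(version)
    return applied
```

Running `migrate` twice is safe: the second call finds every version recorded and applies nothing.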

Steps in the Migration Process

The blog breaks down the migration process into essential steps, including schema creation, data transfer, testing, and verification, emphasizing the importance of a well-defined rollback plan.
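The transfer, verification, and rollback steps can be combined in one transaction: copy the rows, check them, and roll back if anything fails. A sketch on SQLite with hypothetical `legacy_users` and `users` tables, assuming `users` starts empty so that a row-count comparison is a meaningful check:

```python
import sqlite3


class VerificationError(Exception):
    """Raised when the migrated data does not match the source."""


def copy_and_verify(conn: sqlite3.Connection) -> None:
    """Hypothetical step: copy legacy_users into users, verify, else roll back."""
    conn.execute("BEGIN")  # explicit transaction so the whole copy can be undone
    try:
        # Data transfer (with a small transformation: normalize emails to lower case).
        conn.execute("INSERT INTO users (id, email) SELECT id, lower(email) FROM legacy_users")
        # Verification: every source row must have arrived.
        old = conn.execute("SELECT COUNT(*) FROM legacy_users").fetchone()[0]
        new = conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]
        if old != new:
            raise VerificationError(f"row count mismatch: {old} != {new}")
        conn.commit()
    except Exception:
        conn.rollback()  # rollback plan: leave the target exactly as it was
        raise
```

Row counts are only a first check; comparing checksums or sampled rows gives stronger verification.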

Challenges and Best Practices

Addressing challenges such as downtime and data consistency, and stressing clear communication with stakeholders, the blog outlines best practices for successful database migrations.

Post-Migration Activities

The blog concludes with post-migration activities such as performance tuning and monitoring to ensure ongoing stability and optimal database performance.

In conclusion, this comprehensive guide serves as a valuable resource for navigating the intricacies of database migrations.

Conclusions

Well, that's a wrap on our exploration through the tech terrain! We've delved into the intricacies of Bloom filters, those nifty tools that speed up string comparison without hogging storage. From hashing to multiple layers, they've got the search game on point.

Then we cruised through the master/slave architecture, tackling the drama of a single point of failure in databases. Replication, split-brain problems, and sharding – it's like orchestrating a database symphony with cell phones and servers.

And oh, the log-structured merge tree (LSM) came into play, optimizing database writes like a maestro. Linked lists, sorted arrays, and Bloom filters stepped up, giving us a sweet spot between swift writes and efficient reads.

Last but not least, we took a stroll through the world of database migrations. Upgrading versions, switching DBMS, and restructuring schemas – it's like moving houses, but for data. The planning, the types of migrations, the tools – it's a whole migration party.

In this ever-evolving tech saga, whether you're fine-tuning search efficiency, fortifying data resilience, or navigating the migration maze, the goal remains the same: crafting systems that are efficient, reliable, and adaptable. So here's to staying tech-savvy and embracing the ever-changing landscape of bits and bytes! Cheers to the tech journey ahead! 🚀
